COMS W4118 Operating Systems I

Virtual Memory

Page Swapping & Faulting

Motivation

Process doesn’t need all of its pages in memory all at once in order to run. Only keep referenced pages in main memory. Keep unreferenced pages on slower, cheaper backing store (e.g. disk).

- Demand paging: only bring pages into main memory when necessary

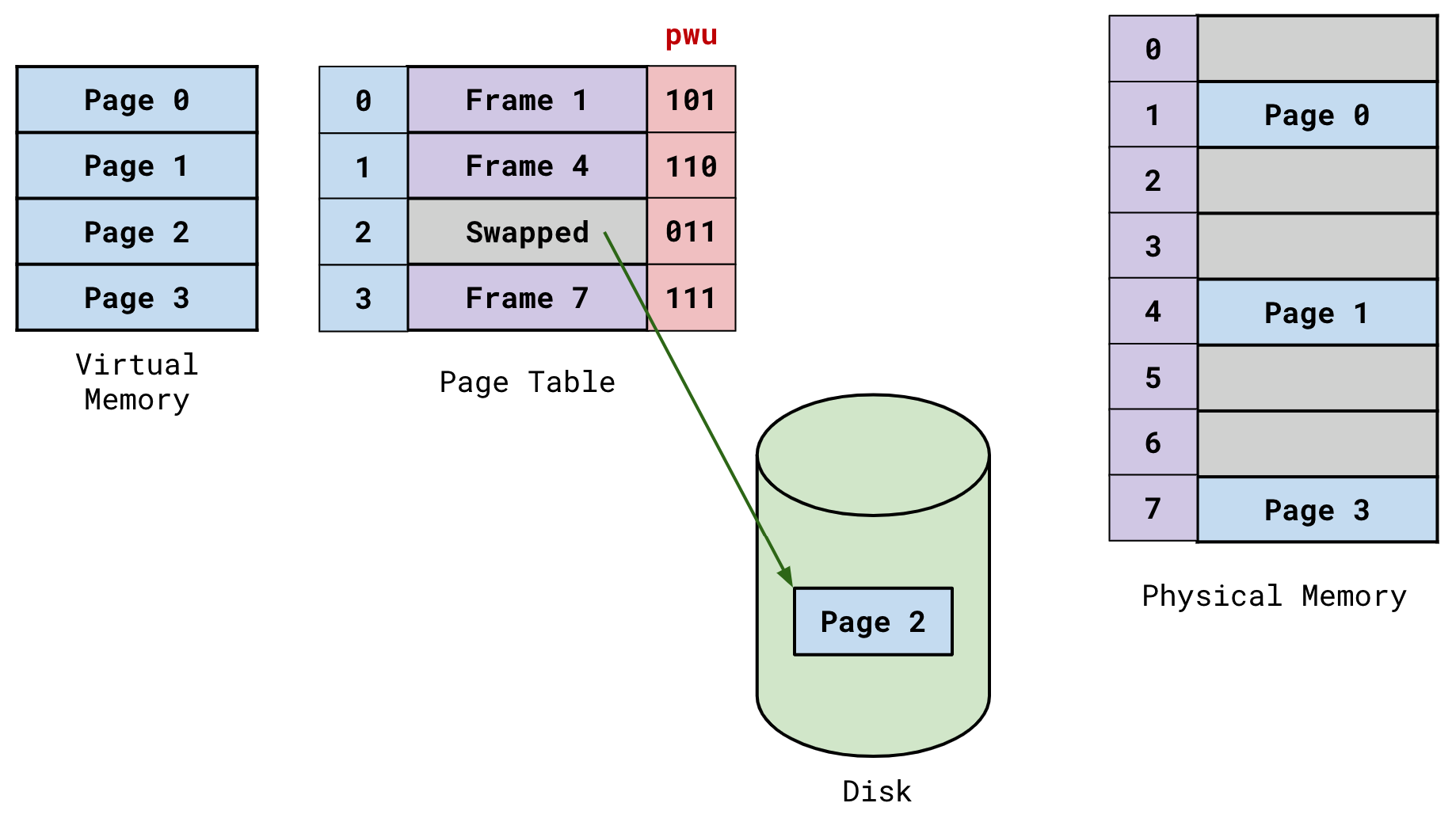

Swapping

When there isn’t enough space in main memory, swap out the page contents to disk so that other page can take its spot in memory. OS updates page table to say that the virtual address to physical address mapping is no longer active (turn off present bit). OS remembers disk address in case process needs to access page again.

Pages are written to a swap partition on disk. When we want to make room for more pages in main memory, write out a “victim” page to the swap partition. When that page is needed again, read is back from the swap partition into main memory (possibly having to evict another victim page).

Note that swapping isn’t too important anymore, modern machines have a lot of RAM.

Page fault

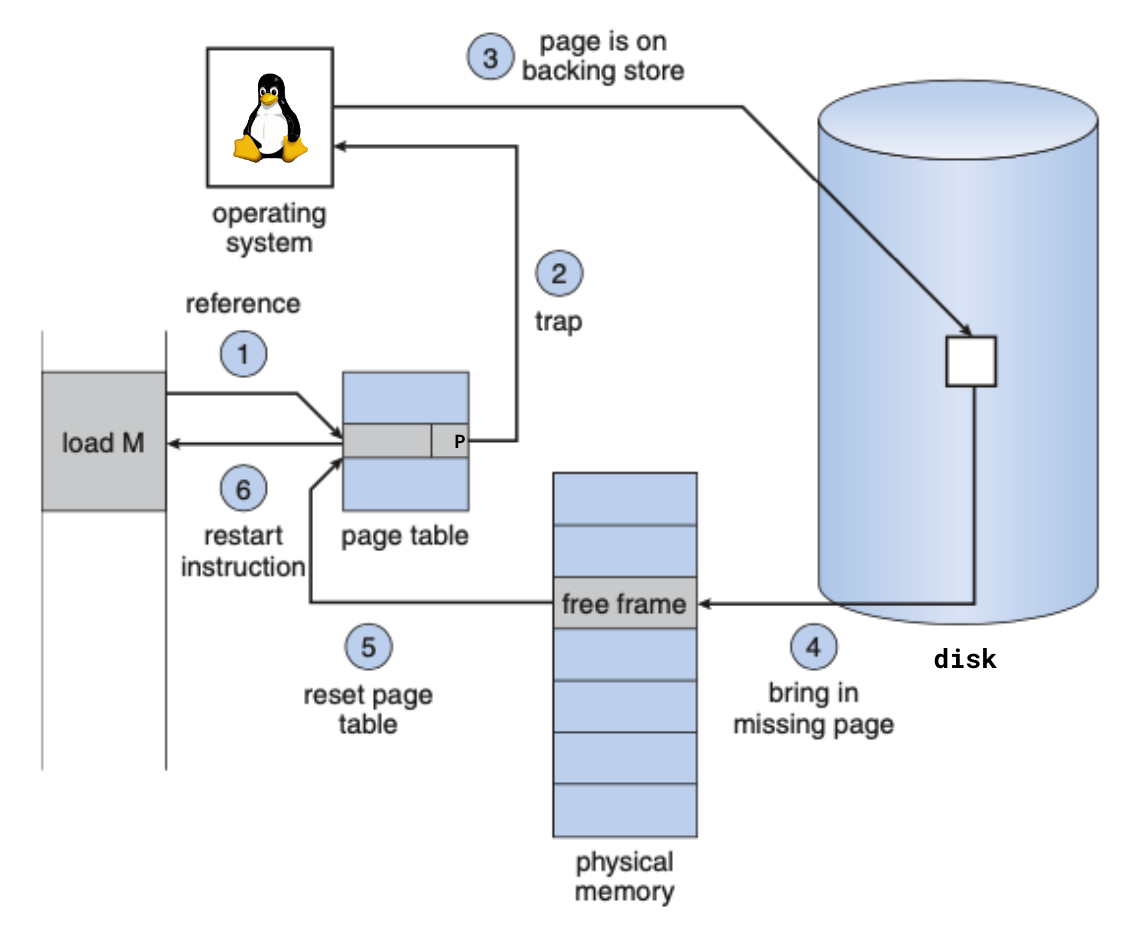

Recall that the hardware performs virtual address translation: lookup PFN in TLB or perform page table walk. What if the page is not present? Need the OS to intervene: raise exception to trap to OS (page fault).

(adapted from OSCE figure 8.6)

Memory access steps:

loadinstruction carried out by MMU- check TLB (not shown)

- TLB miss, consult page tables (only 1-level shown for simplicity)

- present bit off, trap to OS via page fault

- OS determines that page fault is because page was swapped to disk

- issue I/O request

- recall that I/O is slow: put process to sleep in the meantime

- this is why

kmalloc()/copy_to/from_user()could block: might need to fetch referenced pages from disk or swap some pages out to disk to make space

- I/O request satisfied, page written into main memory

- update PTE to point to new PFN, turn on present bit

- restart

loadinstruction- another TLB miss, but this time, page table walk succeeds, install translation into TLB

- alternatively, install translation into TLB after updating page table so that restarted instruction is a TLB hit

OS page fault handler handles exception raised by hardware:

- handle swapped-out pages (diagram above)

- handle illegal access

- user mode accessing kernel space

- write access on read-only region

- either send offending process

SIGSEGVor handle COW

Page Replacement Policies

When no frames in main memory are free to be mapped to a page, need to select a victim page to swap out to disk to make room in main memory. How should we select the victim page?

Page replacement algorithm should minimize # of page faults.

- Evaluation: run algorithm on a reference string of page accesses assuming N frame slots in main memory. Compute number of page faults on that string.

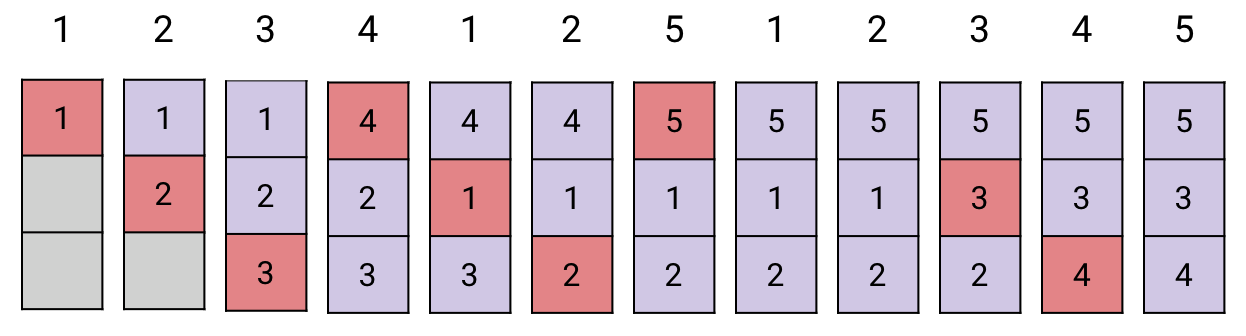

- In all subsequent examples, the reference string is:

1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

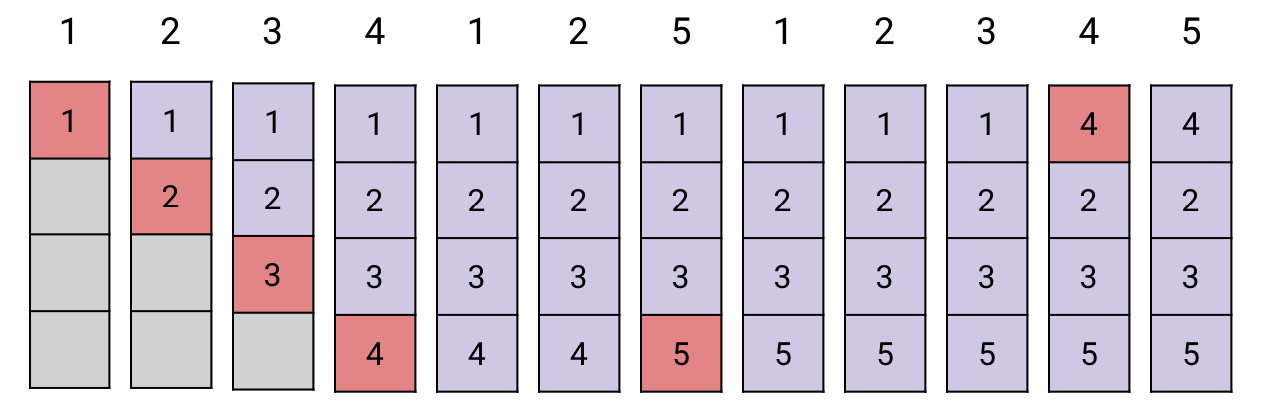

Optimal Page Replacement (OPT/MIN)

Swap out the page that won’t be used for longest time in the future!

Only 6 page faults.

- first 4 were compulsory misses (a.k.a. cold-start). Cache was empty, so page faults had to happen.

Problem: difficult to accurately predict the future..

FIFO Page Replacement

Treat frames as a FIFO queue, swap out the page that was loaded in first

10 page faults!

- access patterns are ignored…

Observation, what if physical memory was smaller (i.e. can only hold 3 frames instead of 4 frames?)

Only 9 page faults!

- Somehow we have MORE page faults when we have MORE physical memory?!

- Belady’s anomaly: FIFO algorithm page fault rate may or may not increase as number of frames increases.

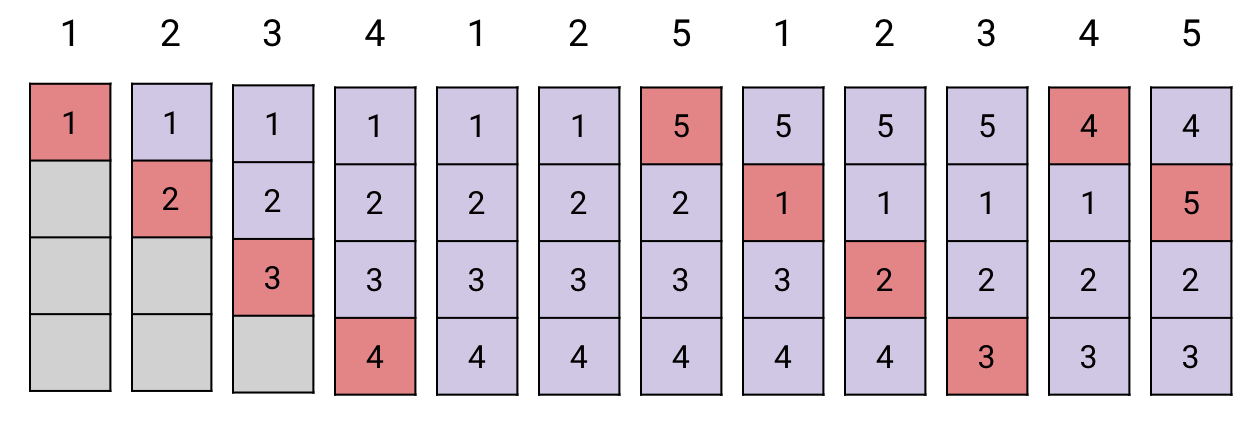

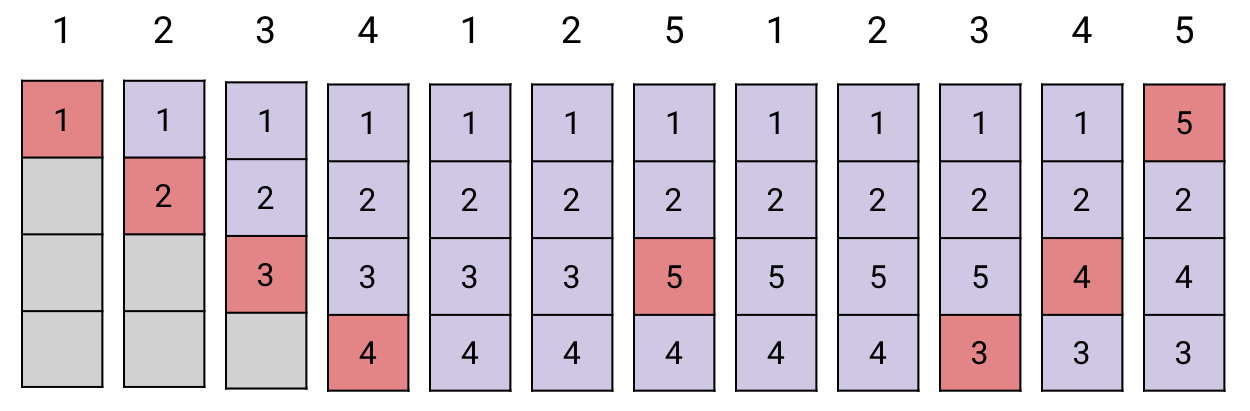

Least-Recently-Used (LRU) Page Replacement

Swap page that hasn’t been used in the longest time. Can use arbitrary tiebreaker (e.g. FIFO).

8 page faults

- taking into account locality, LRU approximates OPT

Attempt 1: LRU Hardware Implementation

- Associate a time counter with each page: each time a page is referenced, save system clock into page time counter

- Page replacement: perform linear scan to find page with oldest time counter

- Can we do better than linear search time?

Attempt 2: LRU Software Implementation

- Maintain a doubly linked list of pages

- Each time page is referenced, move to front of list

- Page replacement: remove page from back of list

- Better than linear scan, but requires several pointer updates..

- High contention on multiprocessor

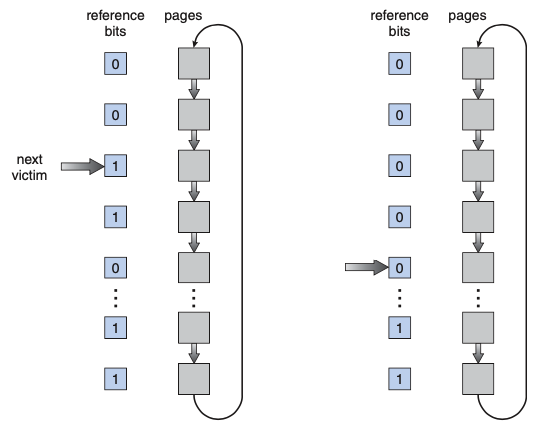

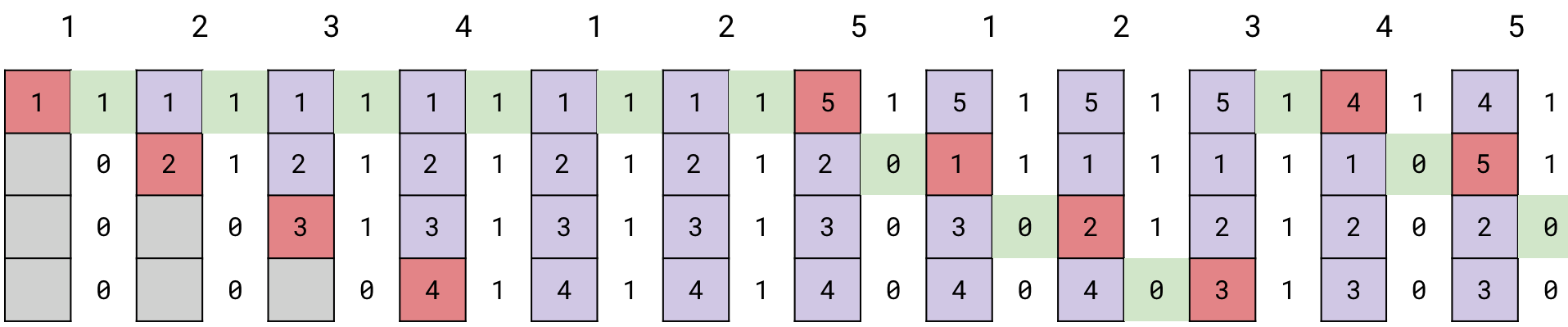

Attempt 3: LRU approximation: Clock/Second-chance algorithm

Let’s approximate LRU: find a not recently accessed page, but not necessarily the least recently accessed

Remove a page that has not been refereced recently.

- maintain reference bit per page

- on memory reference: hardware sets bit to 1

- page replacement: OS finds a page with referenced bit cleared

- over time: OS traverses all pages, clearing reference bits

(adapted from OSCE figure 8.17)

next victim: a cursor over the circular list of pages (i.e. the “clock” hand)

If reference bit is 1, clear it and advance hand. Else, return current page as victim.

10 page faults

- simple to implement, performs reasonably

Dirty pages

Dirty page: content has been modified since reading from disk and should be

written back to disk to keep file in sync (e.g. mmap() a portion of a file with MAP_SHARED)

Clock algorithm doesn’t take into account dirty pages when selecting victim page

- Dirty pages are more expensive to evict, since they need to be written to disk

- Disk write is ~5ms

- Clean pages don’t need to be written to disk, can simply be replaced

Clock algorithm extension: use dirty bit to prevent dirty pages from being evicted

- on page reference

- read: hardware sets reference bit

- write: hardware sets dirty bit

- page replacement policy

- reference=0, dirty=0 -> victim page

- reference=0, dirty=1 -> SKIP

- reference=1, dirty=0 -> clear reference bit

- reference=1, dirty=1 -> clear reference bit

- advance hand, repeat

- if no victim page found, run swap daemon to flush unreferenced dirty pages, which clears dirty bit. repeat.

Page Replacement Summary

Optimal: throw out page that won’t be used for longest time in future

- Best algorithm if we can predict future

- Good for comparison, but not practical

Random: throw out a random page

- Easy to implement

- Works surprisingly well. Why? Avoid worst case

- Cons: random

FIFO: throw out page that was loaded in first

- Easy to implement

- Fair: all pages receive equal residency

- Ignore access pattern

LRU: throw out page that hasn’t been used in longest time

- Past predicts future

- With locality: approximates Optimal

- Simple approximate LRU algorithms exist (Clock)

Alternative Paging Strategies

- Demand paging: load page on page fault

- cold-start: process starts with no pages loaded, incurs page faults

- Request paging: user specifies which pages are needed

- requires users to manage memory by hand, unreasonable

- Pre-paging: load page before it is referenced

- interleave disk I/O with meaningful computation

- spatial locality: bring in adjacent pages

Thrashing

High memory pressure: what if need more pages than can fit into RAM?

- page fault to get page, perform I/O to read from disk

- evict a page, maybe perform I/O to write back to disk

- …but then we might need to evict that page to make room for another page…

Thrashing: system busy just swapping pages

- high page fault rate

- lots of I/O wait time, low CPU utilization

OS needs to make sure dirty pages are periodically written back to disk. Can also take more drastic measures… more on thrashing next lecture.

Last updated: 2023-03-26